Google AI Mode Explained: How It’s Reshaping Search and Content with Real Examples & Tips

by Tapam Jaswal

If you’re in SEO or content and have been watching Google’s changes, you already know: AI Mode isn’t a minor tweak; it’s a total shift in how search works.

After deep dives into Doreid Haddad’s “AI Mode, Made Simple,” Mike King’s technical breakdown of AI Mode, and Steve Toth’s curated insights, I’ve pulled together everything you need to compete and win, from mapping fan-out queries to building content ecosystems and writing with triplet logic.

The New Search Reality

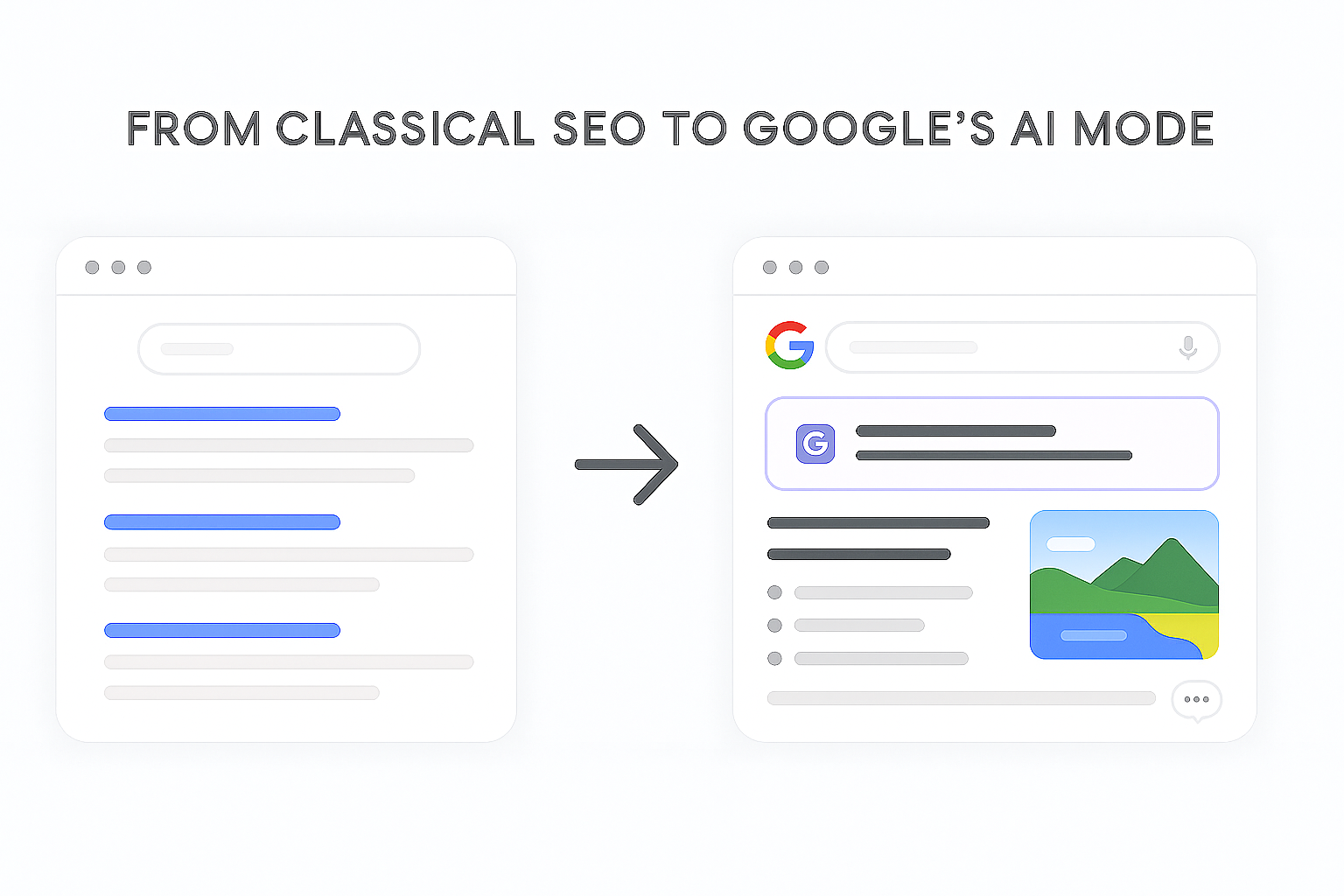

Classic SEO was about getting your page to rank #1 for a keyword, then hoping users would click through and read your full content.

AI Mode changes everything:

- Google now breaks every search into a series of micro-questions (the “fan-out”).

- It compiles answers using passages, tables, visuals, and more, not just blue links.

- It tailors results based on past search behavior, location, and preferences.

- It retrieves up-to-date, real-time information through Retrieval-Augmented Generation (RAG), ensuring responses are timely and contextually relevant.

- Success isn’t determined by ranking #1 or driving clicks alone; it comes from being the best answer to one specific micro-question for one user at the exact moment they need it.

How Google’s AI Mode Works (Layman’s Terms & Real-World Examples)

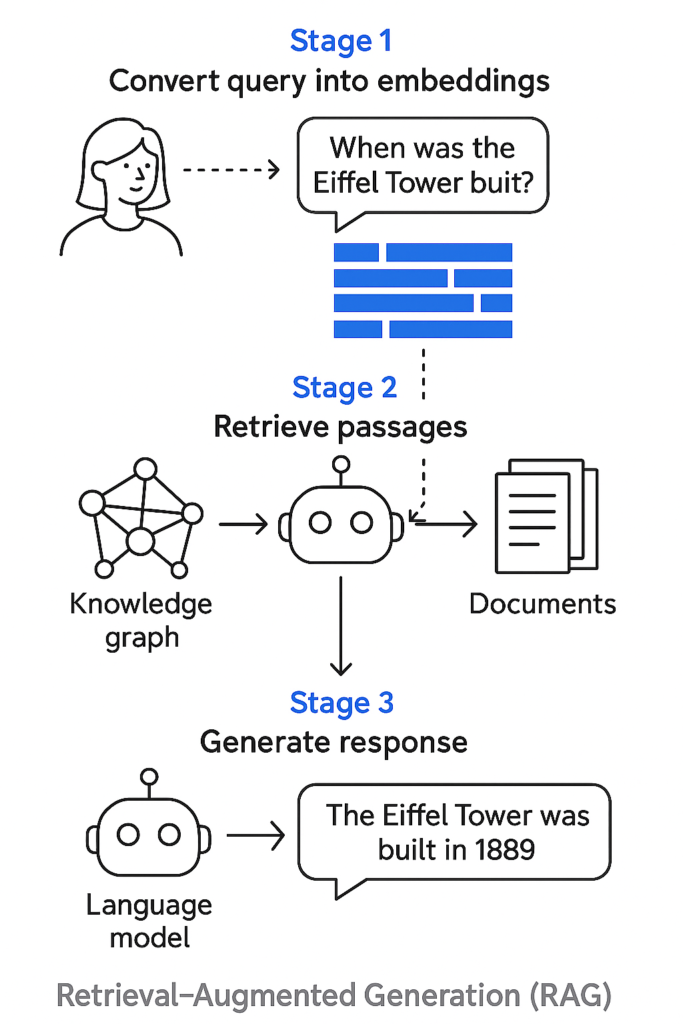

Google’s AI Mode can seem like a black box, but once you understand the process, it’s surprisingly methodical. At its core, AI Mode uses Retrieval-Augmented Generation (RAG). In this two-step process, Google’s AI first retrieves the most relevant pieces of information from across the web. Then it generates an answer by weaving those pieces into a coherent, personalized response.

What is RAG, and how does it work?

Think of RAG like a smart research assistant: it reads the internet on your behalf, grabs the best excerpts, and explains them to you clearly. As described in Mike King’s guide on iPullRank, RAG enhances factual accuracy and contextual depth by combining retrieval with generation, making search responses more grounded and specific.

Instead of relying solely on what it already knows, RAG lets the AI fetch the most relevant data before responding. Anchoring a response in retrieved data like this is known as grounding, and it improves the factual accuracy, context, and timeliness of the results.

RAG works in three stages:

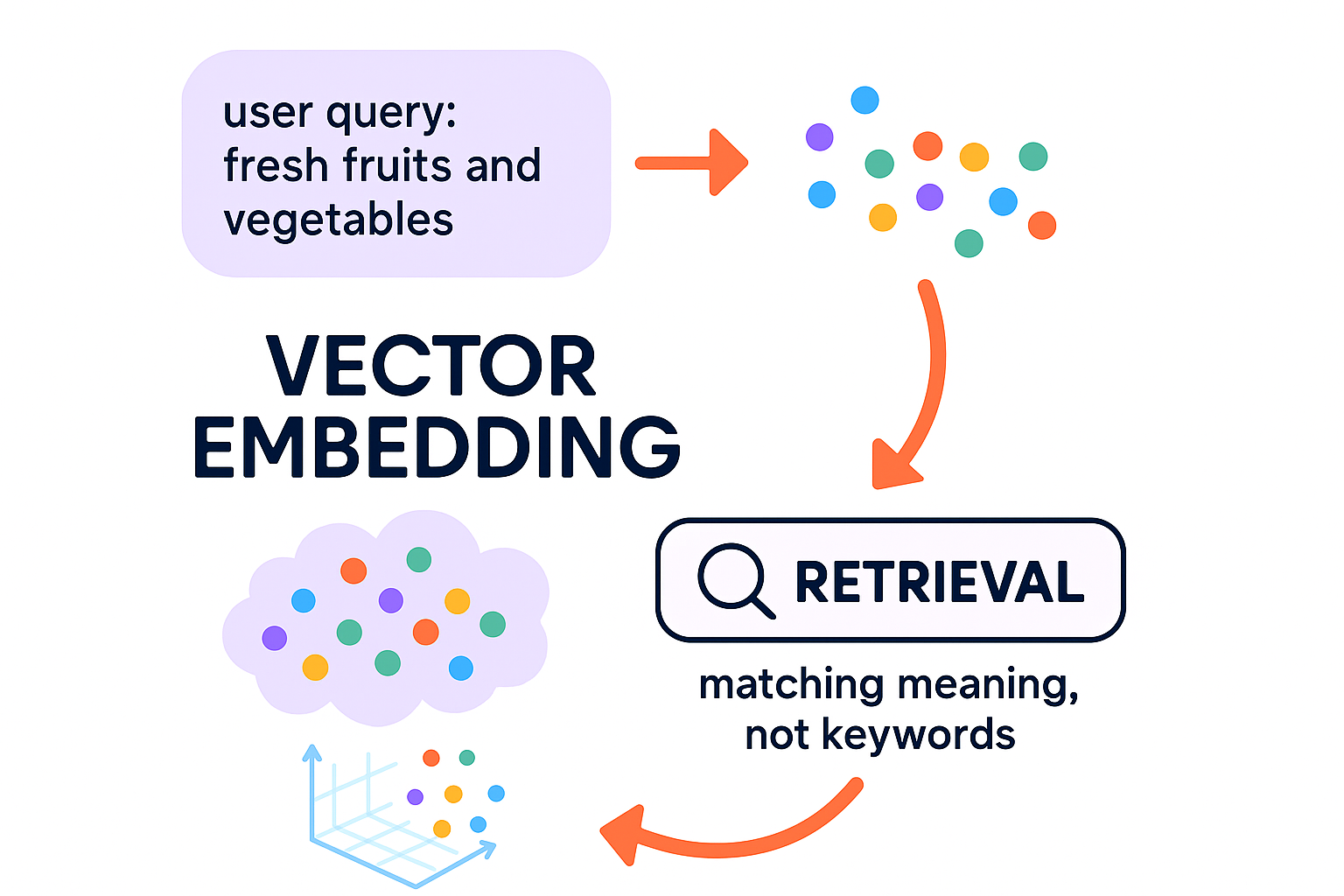

- Input encoder: Converts your search into vector embeddings.

- Neural retriever: Finds passages from knowledge graphs, indexed pages, or shopping graphs.

- Output generator: The LLM (like Gemini or ChatGPT) generates a final, refined answer from the retrieved data.
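To make the three stages concrete, here is a toy sketch in Python. It is not how Google implements RAG: the “encoder” is a plain bag-of-words counter standing in for learned embeddings, the “retriever” ranks a hard-coded list of three passages by cosine similarity, and the “generator” only stitches the retrieved passages together where an LLM would write prose. The names `encode`, `retrieve`, `generate`, and `PASSAGES` are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical passage index ("fraggles"); a real index holds billions of passages.
PASSAGES = [
    "MacBook Air excels in portability and color accuracy.",
    "Dell XPS 15 offers more raw power for multitasking.",
    "Greek yogurt parfait is a high-protein breakfast.",
]


def encode(text: str) -> Counter:
    """Input encoder (toy): lowercase word counts instead of a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Retriever (toy): return the k passages most similar to the query."""
    q = encode(query)
    return sorted(PASSAGES, key=lambda p: cosine(q, encode(p)), reverse=True)[:k]


def generate(query: str, passages: list[str]) -> str:
    """Generator (stand-in): an LLM would synthesize prose; we only list the grounding passages."""
    lines = [f"Answer to: {query}"] + [f"- {p}" for p in passages]
    return "\n".join(lines)


question = "Which laptop has the best portability and color accuracy?"
print(generate(question, retrieve(question)))
```

Swapping the counter for a real embedding model and the list for a vector index changes the quality, not the shape: encode, retrieve the closest passages, then generate from them.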

This shift also changes what SEO must focus on. Google no longer chooses entire pages; it looks for fragments or “fraggles” that answer micro-questions directly. These are the highlighted sections you see when AI Overviews link to specific parts of a page. To compete, you need standout passages, not just optimized pages.

RAG’s use of knowledge graphs further enhances contextual understanding. For example, Google’s Shopping Graph helps refine results for queries like “best hiking boots for the Pacific Northwest,” factoring in waterproofing, climate suitability, and user reviews.

RAG is still evolving, and testing has found that over 60% of AI-generated answers contain inaccuracies, but its capabilities are improving fast. SEOs must adapt now by focusing on clarity, passage-level optimization, and real-time relevance.

For a detailed breakdown of how RAG is reshaping search and SEO, check out Francine Monahan’s excellent article: How Retrieval-Augmented Generation Is Redefining SEO.

Traditional SEO vs. Google’s AI Mode

Old Way:

Search “best beginner DSLR,” see a list of links, open five tabs, and compare.

AI Mode:

Google breaks your query into micro-questions like:

- “Which camera is the lightest?”

- “Best under $500?”

- “Good for low-light photography?”

RAG finds the clearest, most relevant paragraphs, tables, and lists from around the web for each micro-question and assembles a personalized answer just for you.

Example (Real-World):

I search: “Best laptop for graphic design students.”

Google might pull a list comparing “MacBook Air vs Dell XPS: battery life, weight, and screen quality.”

It could also include:

- A quote from Reddit or a student design forum about usability

- A product table showing screen resolution and battery life

- A visual or list highlighting which laptop is preferred for digital illustration or animation

- A comparison passage like: “MacBook Air excels in portability and color accuracy, while Dell XPS 15 offers more raw power for multitasking.”

This is AI Mode’s reasoning chain in action: it gathers specific fragments that each answer a sub-question, like price, weight, or battery life, rather than evaluating entire pages. These fragments are then processed by an LLM (like Gemini or ChatGPT), which synthesizes them into a final, well-rounded response tailored to the user’s query.

Why Classic SEO Alone Doesn’t Work Anymore

1. Being #1 is Not Enough:

You win by writing clear, focused mini-answers to real micro-questions, not by just having a massive post that “ranks.”

Example: Instead of a giant post on “healthy breakfasts,” include a bite-sized passage: “High-protein breakfast for gym goers: Greek yogurt parfait with nuts and seeds.”

That 12-word snippet is what Google might choose for the AI answer, even if you’re not #1 overall.

2. Results Are Personalized:

Two people searching for “easy pasta” can get different results:

- One sees vegan pasta ideas (because they read vegan blogs).

- Another sees Alfredo recipes (because they watch cooking channels on YouTube).

3. Google Pulls from All Over:

Google draws on passages, tables, charts, video transcripts, even podcast quotes: anything that best answers the micro-question.

Step-by-Step Guide to Optimizing for Google’s AI Mode

1. Map the Fan-Out: Cover Every Micro-Question

Google’s AI instantly explodes every head term into dozens of micro-questions, known as “fan-out queries.” These micro-intents reflect the different angles and contexts users bring to a topic. If you’re not answering these, you’re unlikely to appear in AI Overviews.

For example:

- “Best DSLR under $700” (budget-focused)

- “Best lightweight DSLR for beginners” (user type-specific)

- “Top DSLR brands in 2025” (brand-centric)

Each of these sub-questions is an opportunity to surface in an AI Overview.
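A rough way to picture the fan-out is to expand a head term through facet templates. Google’s actual fan-out is produced by its models, not by fixed templates, so treat this Python sketch as an illustration only; `fan_out` and `FACETS` are hypothetical names, and the templates reuse the DSLR examples above.

```python
def fan_out(head_term: str, facets: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Expand a head term into (facet, micro-question) pairs via string templates."""
    queries = []
    for facet, templates in facets.items():
        for template in templates:
            queries.append((facet, template.format(term=head_term)))
    return queries


# Hypothetical facets mirroring the budget / user-type / brand angles above.
FACETS = {
    "budget": ["best {term} under $500", "best {term} under $700"],
    "user type": ["best lightweight {term} for beginners"],
    "brand": ["top {term} brands in 2025"],
}

for facet, question in fan_out("DSLR", FACETS):
    print(f"{facet:<10} | {question}")
```

Keeping the facet label next to each micro-question makes it easy to see which angles (budget, user type, brand) a content plan still ignores.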

🔍 Use Qforia by iPullRank to discover fan-out questions at scale and fill your gaps strategically.

Action:

- Use tools like AnswerThePublic, Perplexity, Gemini, Google Search Console, and Google’s “People Also Ask.”

- For each topic, list all plausible micro-questions and who’s asking them.

- Create or refactor passages so that every micro-question gets an answer.

- Use the fan-out queries strategically: weave them into new passages on the same page when they are contextually relevant, or create dedicated pages when a query deserves deeper treatment. Use data tools like Qforia to identify missed micro-intents and systematically map them to your content ecosystem.

2. Write “Mini-Answers” with RDF-Triplet Logic

Google’s LLMs love clear, answer-first sentences, often in “triplet” form (subject–predicate–object).

Triplet Examples:

- “The 2024 EcoCar gets 55 MPG, according to EPA data.”

- “Pizza Town delivers in 30 minutes across Brooklyn.”

- “Notion supports unlimited pages for all users.”

Tips:

- Start every mini-answer with the answer or stat.

- Include the brand, product, or entity name.

- Keep it concise, ideally under 40 words.

- Cite a credible source if possible.

- Zero fluff: Cut all unnecessary words.
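Several of these tips are mechanical enough to check automatically. The Python sketch below lints a single passage for length, a named entity, and filler words. The 40-word limit comes from the tips above, while the `FLUFF_WORDS` list and the `check_mini_answer` helper are hypothetical and should be adapted to your own style guide. It cannot judge whether the answer really comes first or whether a source is credible; that still needs an editor.

```python
# Hypothetical filler-word list; tune it to your own style guide.
FLUFF_WORDS = {"very", "really", "basically", "actually", "simply"}


def check_mini_answer(text: str, entity: str, max_words: int = 40) -> list[str]:
    """Lint one passage against the mini-answer tips; an empty list means it passes."""
    problems = []
    words = text.split()
    if len(words) > max_words:
        problems.append(f"too long: {len(words)} words (limit {max_words})")
    if entity.lower() not in text.lower():
        problems.append(f"entity '{entity}' is not named")
    # Strip surrounding punctuation before comparing against the filler list.
    cleaned = {w.strip(".,;:!?\"'").lower() for w in words}
    fluff = sorted(cleaned & FLUFF_WORDS)
    if fluff:
        problems.append(f"filler words: {', '.join(fluff)}")
    return problems


print(check_mini_answer("The 2024 EcoCar gets 55 MPG, according to EPA data.", "EcoCar"))
print(check_mini_answer("This car is really very efficient.", "EcoCar"))
```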

3. Build Content Ecosystems, Not Just Pages

Google’s AI Mode is multimodal; it pulls from articles, videos, podcasts, and infographics. If you don’t provide these assets, Google will quote or summarize someone else’s.

Rule of Three for Every Key Topic:

- Authoritative article: “Ultimate Guide to Hybrid Bikes for Commuters”

- Visual asset: an infographic such as “Hybrid vs. Road vs. Mountain Bikes”

- Short video or audio clip: a 60-second review or a podcast snippet (with a transcript!)

How to Implement:

- Add schema markup (FAQ, Video, Review).

- Use descriptive alt-text and captions.

- Internally link between formats and related answers.
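For the FAQ case, schema markup is usually embedded in the page as a JSON-LD script. Here is a minimal Python sketch that builds schema.org `FAQPage` markup from question-and-answer pairs; the `faq_jsonld` helper is hypothetical, and the sample pair reuses this article’s own definition of semantic triplets. Run the output through Google’s Rich Results Test or the Schema Markup Validator before publishing.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps non-ASCII characters readable in the page source.
    return json.dumps(data, indent=2, ensure_ascii=False)


markup = faq_jsonld([
    (
        "What are semantic triplets?",
        "Semantic triplets are clear statements in subject–predicate–object form.",
    ),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same question-and-answer data you publish keeps the visible FAQ and the structured data from drifting apart.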

4. Optimize Internal Linking and Entities

- Use intent-matching anchor text (“compare trail shoes,” “battery life tests”).

- Always reference brands, products, or entities exactly as they’re listed in Google’s Knowledge Graph.

- Every passage should link to a related deep dive or supporting page.

- Use author bios, about pages, and entity markup.

5. Track & Test: AI Mode Visibility > Old Rankings

Don’t just watch your classic rankings anymore – track how your passages and assets show up in AI Overviews and AI-generated results.

How to Test:

- Try personalized searches with logged-in Google accounts from different locations and devices.

- Use tools like Gemini and Perplexity to see if your content appears in AI answers.

- Update your content briefs: Add a “Fan-Out Audit” column – track which micro-questions/persona angles you’re covering (or missing).

- Monitor brand mentions: Unlinked brand mentions can be just as valuable as backlinks in the AI era.
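The “Fan-Out Audit” column can start as a simple coverage check: list the micro-questions and personas for a topic, mark which ones your content already answers, and surface the gaps. This Python sketch assumes you maintain both lists by hand (or export them from a tool like Qforia); the data and the `fan_out_audit` helper are hypothetical.

```python
def fan_out_audit(micro_questions: dict[str, str], covered: set[str]) -> list[dict[str, str]]:
    """Return one audit row per micro-question with its persona and coverage status."""
    return [
        {
            "question": question,
            "persona": persona,
            "status": "covered" if question in covered else "MISSING",
        }
        for question, persona in micro_questions.items()
    ]


# Hypothetical fan-out for one topic: micro-question -> persona asking it.
MICRO_QUESTIONS = {
    "Best DSLR under $700": "budget buyer",
    "Best lightweight DSLR for beginners": "beginner",
    "Top DSLR brands in 2025": "brand shopper",
    "Best DSLR for low-light photography": "night shooter",
}
COVERED = {"Best DSLR under $700", "Top DSLR brands in 2025"}

rows = fan_out_audit(MICRO_QUESTIONS, COVERED)
for row in rows:
    print(f"{row['status']:<8} {row['persona']:<14} {row['question']}")
missing = sum(row["status"] == "MISSING" for row in rows)
print(f"Coverage: {len(rows) - missing}/{len(rows)} micro-questions")
```

Each MISSING row is a brief item: either a new passage on an existing page or a dedicated page, as described in step 1.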

More Pro Tips for AI-Mode SEO Success

A. Embrace Semantic Triplets

Google’s AI loves clear, “triplet” sentences (subject–predicate–object), making your answers easy to quote.

Template:

- [Product/brand/feature] + [does what/how] + [with result/stat/benefit].

Example:

- “The Apple Watch Series 9 tracks sleep cycles with 95% accuracy, according to Sleep Foundation data.”

- “Rivian R1S offers 316 miles of range, excelling at off-road capability; Tesla Model X offers superior tech but lags on range.”

Why?

Triplets make your content both human-friendly and LLM-friendly, and they are easier for AI to extract and quote.

B. Cover Personas, Not Just Keywords

AI Mode doesn’t show the same answer to everyone. Make sure your content addresses different user scenarios and needs.

Examples:

- “Best savings account for freelancers”

- “Standing desk recommendations for tall users”

- “Budget laptops for university students”

How:

- Add persona-specific sub-sections or FAQs.

- Test searches for different user types.

C. Use Non-Text Formats (Tables, Lists, Video, Audio)

AI Mode loves tables, lists, charts, and videos.

Examples:

- Table: Compare HelloFresh vs Blue Apron vs EveryPlate on price, dietary options, and portion size.

- Chart: “Hybrid bikes vs road bikes: comfort, speed, cost.”

- Video: 30-second review, with a transcript for SEO.

D. The “Rule of Three” (Content Ecosystem)

For every high-value topic:

- One authoritative article

- One visual asset (infographic, chart, table)

- One rich media piece (video, podcast, or audio clip with transcript)

FAQs: Google’s AI Mode & Content Strategy

What is Google’s AI Mode, and how is it different from classic search?

Google’s AI Mode transforms a single query into dozens of related micro-questions, then builds a personalized answer from the best passages, tables, visuals, and lists across the web. Unlike classic search, it’s not about ranking first; it’s about being the best response for a specific intent.

How can I ensure my content appears in AI Overviews?

Write mini-answers that stand alone. Use semantic triplets and start with the answer. Mention brands, products, and entities. Add tables, charts, schema, and FAQs. Cover all user intents and persona angles.

Does Google AI Mode reward long content?

Not for length alone, but it does reward in-depth, well-researched content. Google favors clear, standalone passages (ideally under 40 words) that directly answer a micro-question. It’s about precision, not padding.

How do I know if my content is “AI Mode-ready”?

Your content is AI Mode-ready if each paragraph stands alone, delivers a clear answer, and fits naturally within a reasoning chain. Use semantic triplets such as “Notion [subject] allows [predicate] unlimited pages on all plans [object].” Keep your writing concise (ideally under 40 words), fact-based, and formatted for clarity.

Key traits to check:

- Paragraphs answer one specific micro-question.

- Each section includes a stat, example, or named entity.

- Visuals or structured data enhance depth where useful.

- Content fragments make sense out of context and can be quoted directly by LLMs.

How do I optimize for “hidden” or “fan-out” queries?

- Identify trade-offs, comparisons, and persona twists.

- Use fan-out tools like Qforia or AnswerThePublic.

- Expand briefs with missed micro-intents.

- Add targeted visuals or paragraphs for each variation.

What are semantic triplets, and why do they matter?

Semantic triplets are clear statements in subject–predicate–object form. For example: “Rivian R1S [subject] delivers [predicate] 316 miles of range per full charge [object].” These formats are easier for AI to extract and quote, which helps your snippet win in AI Overviews.

What is RAG (Retrieval-Augmented Generation), and how does it work?

RAG powers AI Mode by retrieving relevant content first, then generating a personalized answer. It works in three steps:

- Embeddings: Google converts your query into vector embeddings (see Google’s explanation).

- Retrieval: It finds the most relevant passages from knowledge sources.

- Generation: It uses those results to write a helpful, grounded response.

This method makes responses more accurate and contextual. Francine Monahan’s full breakdown dives deeper into how RAG works and why it matters for SEO.

Are AI Overviews always correct?

Not yet. Over 60% of AI-generated answers have been shown to contain factual errors (source). That’s why Google emphasizes grounding and retrieval. SEOs should help improve accuracy by offering clear, high-trust snippets with real data, brands, and sources.

What are vector embeddings?

Vector embeddings are numerical representations of data, like text, video, or images, converted into coordinates in a multi-dimensional space. They help AI understand and compare content based on meaning rather than just keywords.
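A small Python example shows what comparing by meaning looks like. The three-dimensional vectors below are made up for illustration (real embedding models produce hundreds or thousands of dimensions). The point is that “cheap DSLR” and “budget camera” share no keywords yet score as near-neighbors under cosine similarity, while “vegan pasta” does not.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings" for illustration only.
embeddings = {
    "cheap DSLR": [0.9, 0.1, 0.3],
    "budget camera": [0.85, 0.15, 0.35],
    "vegan pasta": [0.05, 0.95, 0.1],
}

query = embeddings["cheap DSLR"]
for text, vector in embeddings.items():
    print(f"{text:<14} {cosine_similarity(query, vector):.3f}")
```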

Final Action Checklist

- Add a Fan-Out Audit for every key topic.

- Use queries from Google Search Console (GSC) as well, since GSC has started including data from AI Mode and AI Overviews.

- Use triplets, data, and entities in every passage.

- Think in ecosystems: article + visual + video/audio.

- Cover all persona angles (not just keywords).

- Track and test for AI Overview and answer box visibility, not just classic rankings.

- Build brand mentions and visibility through PR, partnerships, and earned media.

- Proactively engage on social media and in online communities like Reddit and Quora; these channels increasingly influence LLM training data and model outputs.

Want to Go Deeper?

📚 Read Doreid Haddad’s full breakdown on AI Mode

📓 See Steve Toth’s summary of Mike King’s guide

Big thanks to @Doreid Haddad, @Michael King, and @Steve Toth for their clear, practical, and genuinely useful research.

If you’re creating content or have questions about AI search, we’d love to hear from you. Just get in touch.

About the Author

I’m a part of CueForGood’s SEO team. I love watching Football and Anime.
